What is DevOps?

DevOps is a software development strategy that bridges the gap between the Dev and Ops sides of a company.

* It’s not a tool; it’s a methodology for bridging the gap between development and operations teams!

There are often conflicts between the two teams:

- E.g. a software build works fine on a developer’s laptop or machine but doesn’t work in the test or production environment.

- Moreover, the Dev team wants agility, whereas the Ops team wants stability.

There are many other conflicts like these between the Dev and Ops sides of the company, and DevOps helps resolve them.

DevOps Scenarios

Developing multi-tier apps is always challenging, so proper discipline, communication, and transparency are required between teams to succeed.

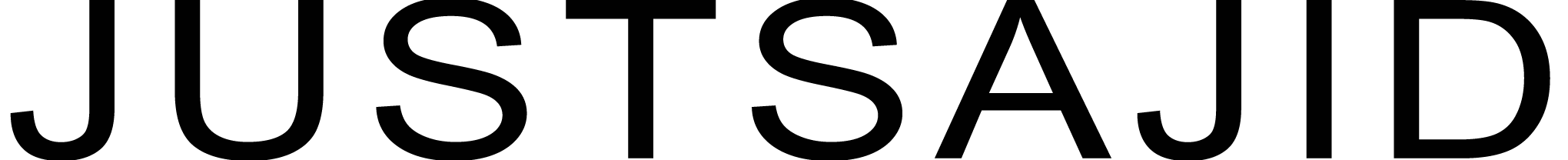

As mentioned above, DevOps is an organizational capability focused on an agile relationship among the business, development, test, and operations organizations. The ultimate goal of DevOps is to deliver faster with higher quality in a continuous and integrated manner. For that purpose, different teams and organizations choose the scenarios that suit them best.

These DevOps scenarios can be followed with IBM services, and they are described below.

Again, the idea is to:

- Accelerate Delivery

- Balance Speed, Cost, Quality, and Risk.

- Reduce Time to Feedback.

With these motivations in mind, we can review the processes that follow, each of which takes a sequential approach to mapping the various activities.

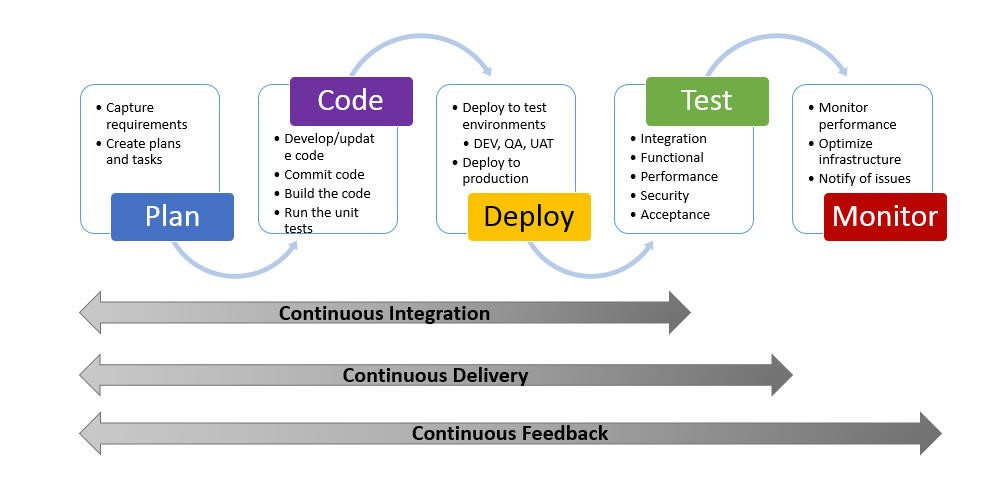

Continuous Delivery

This scenario defines the steps to efficiently implement a code change in a project while explicitly validating the value delivered to the business.

In practice, several loops can exist between these activities, resulting from the collaborative and interconnected nature of a DevOps flow.

Continuous Monitoring

This scenario concerns the production side. Production environments are key to running the business, so when a production environment encounters a problem, it needs to be resolved quickly, with high quality, and with safeguards to ensure the problem does not recur.

Because of the criticality of production systems, transparency is key. Development, operations, and management will want to know what happened:

- How is the problem being looked at?

- How and when is it being resolved?

- When did this happen?

Having a guided set of steps to resolve the issue helps ensure communication, transparency, and quality in resolving the issue.

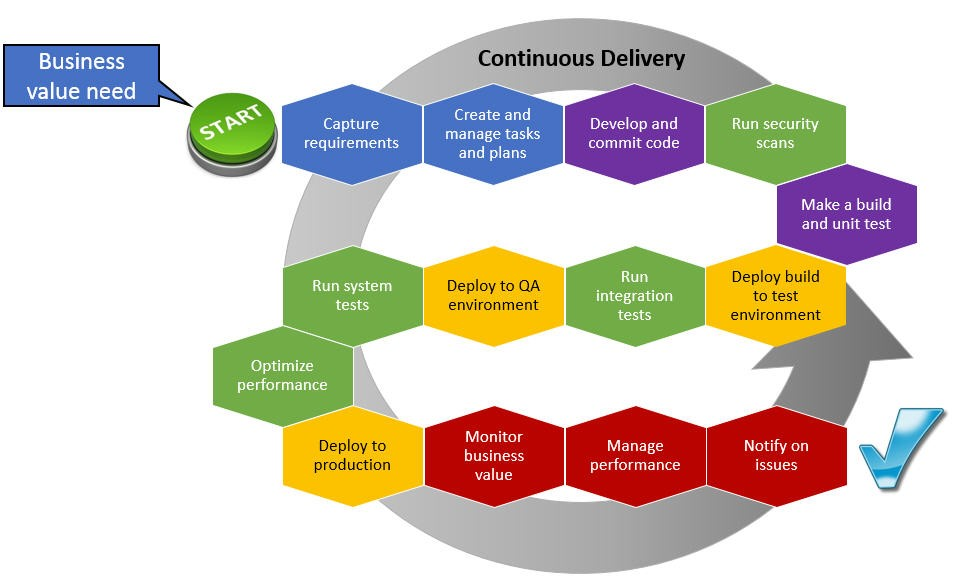

Continuous Feedback

This scenario applies when you have several innovative ideas on how to approach a solution but are not sure which ones your customers would prefer. :-)

It is important when you need confirmation that business objectives can be met before you invest too heavily.

- Use the Innovation scenario to determine which outcome best fits your customer needs.

- The Innovation scenario provides a guide on how to take different possible solutions and narrow them down to the right approach using real customer usage feedback.
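As a minimal sketch of narrowing down candidate solutions with real usage feedback, the Python snippet below ranks a few variants by the share of users who completed a task. The variant names, the `UsageFeedback` type, and all the numbers are made up for illustration; they are not data from any real experiment.

```python
from dataclasses import dataclass


@dataclass
class UsageFeedback:
    """Usage numbers collected for one candidate solution (hypothetical)."""
    variant: str
    users: int        # customers who tried this variant
    completions: int  # customers who completed the intended task

    @property
    def completion_rate(self) -> float:
        # Guard against division by zero when nobody tried the variant.
        return self.completions / self.users if self.users else 0.0


def pick_best(feedback: list[UsageFeedback]) -> UsageFeedback:
    """Return the variant with the highest completion rate."""
    return max(feedback, key=lambda f: f.completion_rate)


# Made-up numbers for three candidate solutions.
results = [
    UsageFeedback("one-page checkout", users=200, completions=90),
    UsageFeedback("guided wizard", users=180, completions=117),
    UsageFeedback("chat assistant", users=50, completions=20),
]

best = pick_best(results)
print(f"{best.variant}: {best.completion_rate:.0%}")
```

A real decision would also have to consider sample size and statistical significance; this sketch only ranks the variants.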

Continuous Integration

Use the Quality Improvement scenario to “shift left” your testing activities. Shift-left practices help avoid the rework, delays, and churn that can occur when major defects are discovered late in the testing cycle, after all integrations and product components are finally brought together as a composite application and made available for the team to test.

If any of the dependent application components are not available to test, virtual services can mimic the real components’ behavior until they are ready.
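The idea of a virtual service can be sketched in a few lines of Python: a stand-in object answers with canned responses, so the code that depends on it can be tested before the real component exists. The `InventoryService` interface, `VirtualInventoryService`, and `can_fulfil` are hypothetical names invented for this illustration.

```python
from typing import Protocol


class InventoryService(Protocol):
    """Interface the real (not yet available) component is expected to offer."""

    def stock_level(self, sku: str) -> int: ...


class VirtualInventoryService:
    """Virtual service: mimics the real component with canned responses."""

    def __init__(self, canned: dict[str, int]) -> None:
        self._canned = canned

    def stock_level(self, sku: str) -> int:
        # Unknown SKUs behave as out of stock.
        return self._canned.get(sku, 0)


def can_fulfil(order: dict[str, int], inventory: InventoryService) -> bool:
    """Code under test: an order is fulfillable if every item is in stock."""
    return all(inventory.stock_level(sku) >= qty for sku, qty in order.items())


virtual = VirtualInventoryService({"widget": 5, "gadget": 0})
print(can_fulfil({"widget": 2}, virtual))               # True
print(can_fulfil({"widget": 2, "gadget": 1}, virtual))  # False
```

Because `can_fulfil` only depends on the interface, the real service can replace the virtual one later without changing the tests.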

This scenario is also extremely helpful if:

- Teams are finding high-impact defects late in the lifecycle, or even in production.

- Teams discover a lot of unreproducible defects in their different test environments.

- Teams are waiting to test until all the systems are available.

Scaled Agile Framework

If we are challenged with scaling agile practices across a growing set of interdependent teams that must collaborate tightly on the delivery of complex releases or systems, then we are likely looking to adopt a SAFe (Scaled Agile Framework) style development model.

SAFe is a structure for applying agile development practices to teams-of-teams working in programs. The framework describes how to drive business value that is captured at the enterprise portfolio level down to its implementation across programs and teams.

The IBM implementation of SAFe, combined with our scenario for program-level delivery to production, helps ensure timely and cohesive delivery of large-scale business value.

Breaking down the major activities of the lifecycle makes each point clearer.

- Planning

Once we get the requirements from the client, we start planning our application. Once planning is done, we start writing the code.

- Code

Multiple developers write code for the same application, so we need a system that can manage the code.

- E.g. how do we go back to a previous commit, or check a previous version of the code?

- To manage the source code, we have tools like Git.

- Git is a distributed version control tool.

- After that, the code is built.

- Build

The build lifecycle includes a lot of things:

- Validating our application.

- Compiling our application.

- Packaging our application.

- Running unit tests and integration tests.

All of these are done in this phase.

For the build, we have tools like Maven and Gradle, depending on the kind of application. For Java, for example, we can go for Maven.

The built application is then deployed onto the test servers for testing.

- Test

Different types of tests are performed in this phase. For end-user testing, which we can consider functional testing, we use tools like Selenium or JUnit.

Once testing is done, the application is deployed onto the production servers.

- Deploy

The application is deployed to the production servers for release, and after that it is continuously monitored by tools like Nagios and Splunk.

To deploy the application onto the production servers, there are tools like Puppet and SaltStack. Tools like these are called configuration management tools.

The major aim is to provision our nodes and deploy our application onto them.

These tools have a similar architecture, a master/slave architecture in which we write the scripts for a particular software stack on the master and deploy them onto the nodes.

That’s how we can maintain an accurate historical record of previous system states as well. If we want to roll back to a previous version of the software stack, we can easily do that, because everything is well documented on the master.
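To make the rollback idea concrete, here is a toy Python model of a master that records every software stack it has applied and pushes the current one to its nodes. It is only a sketch: `ConfigMaster`, `apply`, and `rollback_to` are hypothetical names and do not correspond to the API of Puppet or any other configuration management tool, and the node names and versions are made up.

```python
class ConfigMaster:
    """Toy master: keeps a history of desired states and pushes them to nodes."""

    def __init__(self, nodes: list[str]) -> None:
        self.history: list[dict[str, str]] = []  # every state ever applied
        self.node_state: dict[str, dict[str, str]] = {n: {} for n in nodes}

    def apply(self, stack: dict[str, str]) -> None:
        """Record a new desired state and deploy it to every node."""
        self.history.append(dict(stack))
        for node in self.node_state:
            self.node_state[node] = dict(stack)

    def rollback_to(self, version: int) -> None:
        """Redeploy an earlier recorded state; the rollback is recorded too."""
        if not 0 <= version < len(self.history):
            raise IndexError(f"no recorded state {version}")
        self.apply(self.history[version])


master = ConfigMaster(["test-01", "prod-01"])
master.apply({"apache": "2.4", "php": "8.1"})  # version 0
master.apply({"apache": "2.4", "php": "8.2"})  # version 1
master.rollback_to(0)

print(master.node_state["prod-01"])  # {'apache': '2.4', 'php': '8.1'}
print(len(master.history))           # 3
```

Recording the rollback as a new entry, instead of deleting the newer state, keeps the history complete, which is the property the paragraph above relies on.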

These are the tools we call configuration management tools, and they play a major role in DevOps.

- Operate

For example, on the test server we might require a particular software stack, maybe a LAMP stack, so we can write scripts using these tools and deploy the stack onto the test servers as well as the production servers.

- Monitor

The application is continuously monitored by tools like Nagios and Splunk.

The Integration Phase

The heart of DevOps is this integration phase. We have continuous integration tools available over the cloud, with a number of plugins for various development, testing, and deployment technologies.

Docker’s role in DevOps

Docker is basically a containerization platform.

- We can create Docker containers, which provide a consistent computing environment throughout the SDLC. Docker containerizes our application and has a lot of advantages over VMs.

- Docker is an ideal environment for a microservice architecture.

Integration basically means tying together all the stages we’ve seen in the image above.

- The moment any developer makes a change to the source code in the shared repository, cloud tools pull that code and prepare a build. Once the build lifecycle is complete, they deploy the built application onto the test servers for testing, and then onto the production servers for release.

- Finally, the application is continuously monitored by tools that give feedback and notify the concerned teams. That is what continuous deployment is.
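The flow in the two bullets above can be sketched as a fail-fast pipeline in Python: stages run in order after a commit, and the first failure stops the run and notifies the team. The stage names and the `run_pipeline` helper are assumptions made for illustration, not the interface of any particular CI tool.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]  # (name, action returning success)


def run_pipeline(stages: list[Stage], notify: Callable[[str], None]) -> list[str]:
    """Run stages in order; stop at the first failure and notify the team."""
    completed: list[str] = []
    for name, action in stages:
        if not action():
            notify(f"stage '{name}' failed")
            break
        completed.append(name)
    else:
        # The loop finished without hitting 'break'.
        notify("all stages passed")
    return completed


messages: list[str] = []
stages: list[Stage] = [
    ("build", lambda: True),
    ("deploy to test", lambda: True),
    ("test", lambda: False),  # simulate a failing test run
    ("deploy to production", lambda: True),
]

done = run_pipeline(stages, messages.append)
print(done)      # ['build', 'deploy to test']
print(messages)  # ["stage 'test' failed"]
```

Stopping at the first failure is what keeps a broken build from ever reaching the production stage.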

Implementing Docker in DevOps

There are two ways in which we can use Docker in DevOps.

- A developer writes the project’s requirements in a Dockerfile. For example, whatever a microservice or application requires, the developer writes in a simple Dockerfile.

- Once the Dockerfile is written, we can build a Docker image from it, and from that image we can create as many containers as we want.

Containers are nothing but environments in which we can run our application.

- E.g. if we require a Linux environment, we can run a Linux Docker container. It depends on the kind of environment we want.

- We can then upload the Docker image to Docker Hub. Through Docker Hub, various teams, such as staging or production, can pull that image and create as many containers as they want.

* Docker Hub is to Docker images what a Git repository is to source code!

- The advantage of this architecture is that whatever was on the developer’s laptop or machine can be replicated in the staging and production environments.
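The image, registry, and container relationship described above can be modeled in plain Python. This is an in-memory sketch only: it does not talk to Docker or Docker Hub, and `Image`, `Container`, `Registry`, `run`, and the `shop-api:1.0` name are all hypothetical.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Image:
    """Immutable description of an environment, as built from a Dockerfile."""
    name: str
    base: str
    packages: tuple[str, ...]


@dataclass
class Container:
    """A running instance; many containers can share one image."""
    container_id: int
    image: Image


class Registry:
    """Stand-in for an image registry such as Docker Hub."""

    def __init__(self) -> None:
        self._images: dict[str, Image] = {}

    def push(self, image: Image) -> None:
        self._images[image.name] = image

    def pull(self, name: str) -> Image:
        return self._images[name]


_ids = count(1)


def run(image: Image) -> Container:
    """Create a new container from an image."""
    return Container(next(_ids), image)


# The developer builds the image once and pushes it.
registry = Registry()
registry.push(Image("shop-api:1.0", base="linux", packages=("python3", "flask")))

# Staging and production pull the same image and start their own containers.
staging = run(registry.pull("shop-api:1.0"))
production = [run(registry.pull("shop-api:1.0")) for _ in range(3)]

print(all(c.image == staging.image for c in production))  # True
```

Every container is a separate instance, but all of them are created from one identical image, which is why the environment is the same everywhere.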

Similarly, there is one more way we can use Docker.

2. If we write the requirements for a complex microservice in a Dockerfile and upload it to a Git repository, Jenkins can pull that Dockerfile from the repository and reproduce whatever was on the developer’s laptop or machine in testing, staging, and production.

CI/CD (Continuous Integration / Continuous Delivery) and Continuous Deployment

#Image4

CI – Continuous Integration

The moment any developer commits a change to the source code, a continuous integration tool like Jenkins can pull that code and prepare a build.

Once the build is done, it can run unit and integration tests as well.

Up to this stage, it’s called continuous integration, as we’re not deploying our app onto any server, not even for functional or regression testing. So this is basically an integration stage.

CD – Continuous Delivery

What we can do next is automatically deploy the build to the test environment to perform acceptance testing, also called UAT (user acceptance testing) or end-user testing.

Once we do that in an automated way, it becomes continuous delivery.

CD – Continuous Deployment

It’s basically deploying our application into production for release after testing. Continuous deployment is not a good practice, because even after end-user testing there might be some checks we still need to do, or we may need to market our application first, etc.

So we may want to perform a lot of checks before releasing it to the market.

That’s why continuous deployment is not a good practice; continuous delivery, rather, is what every company tries to achieve nowadays.

So, from the moment a developer commits code through the unit and integration tests, where our application is not deployed onto any server or environment, it is continuous integration. Once the application is deployed to the test environment for UAT, it becomes continuous delivery, and when it is deployed to production for release, it becomes continuous deployment.
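The distinction between the three terms can be summed up in a small Python function that names the practice by how far a commit travels automatically. The `Practice` enum and `classify` function are illustrative names only, and every case assumes commits are already built and unit/integration tested automatically.

```python
from enum import Enum


class Practice(Enum):
    CONTINUOUS_INTEGRATION = "continuous integration"
    CONTINUOUS_DELIVERY = "continuous delivery"
    CONTINUOUS_DEPLOYMENT = "continuous deployment"


def classify(auto_deploy_to_test: bool, auto_deploy_to_production: bool) -> Practice:
    """Name the practice by how far a commit travels without manual steps."""
    if auto_deploy_to_production:
        return Practice.CONTINUOUS_DEPLOYMENT
    if auto_deploy_to_test:
        return Practice.CONTINUOUS_DELIVERY
    return Practice.CONTINUOUS_INTEGRATION


print(classify(False, False).value)  # continuous integration
print(classify(True, False).value)   # continuous delivery
print(classify(True, True).value)    # continuous deployment
```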

This blog was compiled with the help of an article by Marianne Hollier, Cindy VanEpps, Roger LeBlanc and Anita Rass Wan.